by Dirk Helbing & Peter Seele

Big data, artificial intelligence, and digital technologies have left us surprisingly ill-equipped for the challenges now facing us, such as climate change and the COVID-19 pandemic. Building resilience and flexibility – the hallmarks of sustainable systems – into policymaking and international cooperation is a more promising approach.

Our geological epoch, the Anthropocene, in which mankind is shaping the fate of the planet, is characterized by existential threats. Some are addressed in action plans such as the UN’s Sustainable Development Goals. But we seem to be stuck between the knowledge that we must change our behavior and the pull of our entrenched habits.

In an overpopulated world, many have asked, “What is the value of human life?” The COVID-19 pandemic has raised this issue once more, framing the question in stark terms: Who should die first if there are not enough resources to save everyone?

Many science-fiction novels, such as Frank Schätzing’s The Tyranny of the Butterfly, deal with similar concerns, often “solving” the sustainable-development problem in cruel ways that echo some of the darkest chapters in human history. And reality is not far behind. It is tempting to think we can rely on artificial intelligence to help us navigate such dilemmas. The subjects of depopulation and computer-based euthanasia have been under discussion, and AI is already being used to help triage COVID-19 patients.

But should we let algorithms make life-and-death decisions? Consider the famous trolley problem. In this thought experiment, if one does nothing, several people will be crushed by a runaway trolley car. If one switches the trolley onto another track, fewer people will die, but one’s intervention will kill them.

It has been suggested that the problem is about saving lives, but in fact it asks: “If not everyone can survive, who has to die?” Yet lesser evils are still evils. Once we start finding them acceptable, shocking questions are bound to follow, which can undermine the very foundations of our society and human dignity. For example, if an autonomous vehicle cannot brake quickly enough, should it kill a grandmother or an unemployed person?

Similar questions were asked as part of the so-called Moral Machine experiment, which collected data on ethical preferences in situations involving autonomous vehicles from participants worldwide, with the researchers discussing “how these preferences can contribute to developing global, socially acceptable principles for machine ethics.” But such experiments are not a suitable basis for policymaking.

Faced with such dilemmas, people would presumably prefer an algorithm that is fair. Potentially, this would mean making random decisions. Of course, we do not want to suggest that people should be killed randomly, or at all. This would contradict the fundamental principle of human dignity, even if the death were painless. Rather, our thought experiment suggests that we should not accept the framework of the trolley problem as given. If it produces unacceptable solutions, we should undertake greater collective efforts to change the setting. When it comes to autonomous vehicles, for example, we could drive more slowly or equip cars with better brakes and other safety technology.

Likewise, society’s current sustainability issues were not predetermined. They were caused by our way of doing business, our economic infrastructure, our concept of international mobility, and conventional supply-chain management. The real question should be why, nearly 50 years after the publication of the eye-opening Limits to Growth study, we still don’t have a circular, sharing economy. And why were we unprepared for a pandemic, an event that had been widely predicted?

Big data, artificial intelligence, and digital technologies have left us surprisingly ill-equipped for the challenges now facing us, be it climate change, the COVID-19 pandemic, fake news, hate speech, or even cybersecurity. The explanation is simple. While it sounds good to “optimize” the world using data, the optimization is based on a one-dimensional goal function that maps the complexity of the world to a single index. This is neither appropriate nor effective, and it largely neglects the potential of immaterial network effects. It also underestimates human problem-solving abilities and the world’s carrying capacity.

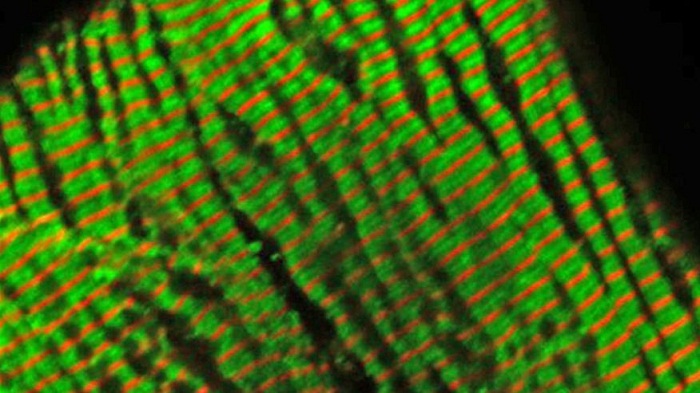

Nature, by contrast, does not optimize; it co-evolves. It performs much better than human society in terms of sustainability and circular supply networks. Both our economy and our society could benefit from bio-inspired solutions that resemble ecosystems, particularly symbiotic ones.

This means reorganizing our troubled world and building resilience and flexibility into policymaking and international cooperation. These hallmarks of sustainable systems are crucial for adapting to and recovering from shocks, disasters, and crises, such as those we face today.

Resilience can be increased in several ways, including redundancies, a diversity of solutions, decentralized organization, participatory approaches, solidarity, and, where appropriate, digital assistance. Such solutions should be locally sustainable for extended periods of time. In other words, rather than “learning to die in the Anthropocene,” as suggested by author Roy Scranton, we should “learn to live” in our current troubled times. This is the best insurance against developments that could force us into the moral quagmire of triaging human lives.

US President-elect Joe Biden may have promised a “return to normalcy,” but the truth is that there is no going back. The world is changing in fundamental ways, and the actions we take in the next few years will be critical to laying the groundwork for a sustainable, secure, and prosperous future.

Dirk Helbing is Professor of Computational Social Science at ETH Zürich.

Peter Seele is Professor of Business Ethics at USI Lugano.

Read the original article on project-syndicate.org.
